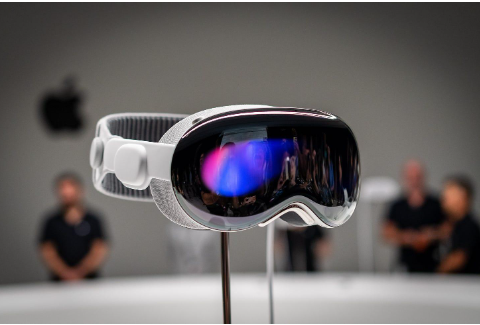

So as far as use cases are concerned, ‘infinite display’, collaboration + knowledge transfer, and live sports/entertainment alone will be enough to drive demand and establish product-market fit.

But this is the tip of the iceberg. Just as no one predicted the App Store and the ensuing explosion of new apps, we can’t predict all the innovation that’s brewing among the long tail of Apple developers who are already diving into the AVP SDK & developer docs.

Of the millions of apps in the App Store, the developers behind a healthy chunk of them are brainstorming, as we speak, about what their apps could look and feel like in a spatial world. And I can’t wait to see the results…

This is going to ruin humanity!

I beg to differ.

On the contrary, spatial/experiential computing just might be a key ingredient in humanity’s salvation, especially with the advent of AI.

There are a variety of philosophical and practical reasons why. I’ll just hit my two favorites.

Philosophically, consider all the complex and daunting problems we face in the world. Most of them lack answers, and in our search for solutions, it’s hard to say where to start.

But one place to start that is hard to refute, and that will certainly help us find the right answers and solutions, is better communication & collaboration: between employees, executives, & scientists; between countries, companies, and local governments; between political groups, their leaders, and their polarized constituents.

Poor communication & collaboration sits at the heart of all our issues, causing a lack of empathy and understanding and, ultimately, poor decision-making, low alignment, and very little progress.

To illustrate the power of spatial computing for communication & collaboration, I’ll fall back on a section from my essay, ‘How to Defend the Metaverse’.

It quotes one of the cyberspace/metaverse OGs: Terence McKenna.

McKenna says, "Imagine if we could see what people actually meant when they spoke. It would be a form of telepathy. What kind of impact would this have on the world?"

McKenna goes on to describe language in a simple but eye-opening way, reflecting on how primitive language really is.

He says, "Language today is just small mouth noises, moving through space. Mere acoustical signals that require the consulting of a learned dictionary. This is not a very wideband form of communication. But with virtual/augmented realities, we'll have a true mirror of the mind. A form of telepathy that could dissolve boundaries, disagreement, conflict, and a lack of empathy in the world."

This form of ‘telepathy’ (a higher-bandwidth, more visual form of communication; the ability to more directly see or experience an idea, an action, a potential future) will benefit not just human-to-human communication but also human-to-machine communication. Which brings us to my practical response du jour.

Practically, we need to consider how humans evolve and keep up in the age of AI.

We’re briskly moving from the age of information to the age of intelligence. But intelligence for whom?

Machines are inhaling all of human knowledge. As a result, every person and every company will have the ultimate companion, capable of producing all the answers, all the options, and all the insights…

How do we compete and remain relevant? Or perhaps better said… How do we become a valuable companion to AI in return?

Just as machines leveled up via transformers and neural nets, we too need better ways to consume, analyze, and ‘experience’ information, especially the information AIs produce, which will come in droves and in a myriad of formats.

AI is going to produce answers, insights, and truth for all kinds of things: new ideas, products, stories, moments in time, scenarios, plans, and, my personal favorite, all the things that remain abstract and unseen by most, such as space, stars, planets, the deep sea, the deep forest, and the inner workings of the human body & mind. The list goes on.

AI is going to reveal things that were previously mysterious, complex, or otherwise impossible to fully grasp.

As it does so… how can AI best communicate its findings back to humans? And how can we fully grok those findings, parse through them, and become empowered to act?

More often than not, our answer back to the AI is going to be, “Don’t tell me, dammit, show me.”

Spatial computing will be the ultimate tool for helping AIs ‘show’ and helping humans ‘know’, ushering in an age of ‘experience’ in tandem with the age of ‘intelligence’.

As a result, humans will be better able to remain in the loop as the final decision maker, fully empowered to add the human touch and tweak the final outcome/output in a way only humans know how: through feeling, intuition, and empathy, à la my essay ‘How to find solace in the age of AI: don’t think, feel’.

Tech vs. Tech

In closing, there is one more common concern within this realm that I admittedly don’t have the best answer to. At least not yet. And that is… once we’re ‘in the loop’ with AI, and spending more time ‘in the machine’ with spatial computing, how do we retain the best parts of humanity, the ones that technology so clearly harms?

Things like our attention and mental health, or our physical movement, social skills, and time in nature.

My prediction is that we’re going to get increasingly good at using tech to combat tech.

Meaning… there are apps and tools that we can build to shape our relationship with tech, negate its afflictions, and build better habits & social connections.

Apple is already doing this today, and I thought it was one of the more compelling parts of the WWDC presentation. They showcased apps for journaling to aid emotional awareness, meditation for mindfulness, and fitness & outdoor hobbies of all types, with unique ways to measure, gamify, and socialize/connect with others, boosting motivation & consistency along the way.

I think this trend is going to accelerate over the coming years. It’s already a cottage industry, with startups such as TrippVR for meditation & mental health, and FitXR for VR fitness.

The AVP’s arrival is going to enhance and legitimize these use cases and, over time, shift people’s relationship with technology while reducing the afflictions born of abstracted, ‘flat computing’, or the afflictions born of boxes tethered to walls and TVs (aka an Xbox or PS5). These current form factors are what keep kids/people stuck inside, isolated, and socially inept.

In contrast… AR, in its ultimate form, will free kids from the confines of a screen and a living room with an outlet, thrusting them back into nature, back into face-to-face contact, and back into a world longed for by prior generations. A world of scratched knees from a treasure hunt in the park, of youthful pride from a fort forged in the woods, or of confidence from winning an argument while playing make-believe in the backyard.

Except this time, the treasure becomes real, the forts become labyrinths, and the figments of make-believe become not so make-believe…

Thanks for taking the time to read Evan's essay. Let us know what you think about this perspective. And if you enjoyed this piece, don’t forget to check out more of his essays and subscribe over at MediumEnergy.io. Here are some of our personal favorites:

- How to Defend the Metaverse

- Finding Solace in the Age of AI

- The Ultimate Promise of the Metaverse