Today, we’re featuring Part II of Evan Helda’s series, ‘The Fate of Apple’s Vision Pro’.

Evan Helda is the Principal Specialist for Spatial Computing at AWS, where he does business development and strategy for all things immersive tech: real-time 3D, AR, and VR. Evan also writes a newsletter called Medium Energy, where he explores the impact of exponential technology on the human experience.

If you've not read Part I, we suggest doing so for complete context & appreciation. Here’s the link.

In Part I Evan reflects on his time at the original Meta, an AR startup that was building an Apple Vision Pro competitor, albeit 5 years prior.

Here in Part II, Evan dives into the critiques from Vision Pro skeptics and dissects why they might be wrong.

If you enjoy this piece, we encourage you to check out more of his content over at MediumEnergy.io! You can also follow Evan on X/Twitter: @EvanHelda

----

Have you ever cried in a business setting?

I have.

It was the first time I ever experienced really good AR. And I mean... really, really good. Like, take-your-breath-away, blow-your-mind good.

Needless to say, they were tears of joy.

The sad part? That experience was 5 years ago.

To this day, I still haven't seen anything that comes close. And I've tried it all: just about every headset and every top application.

Of course, this experience was just a demo. An absolute Frankenstein of a demo at that. To create something this good, we had to duct tape together the best-in-class components of the AR tech stack. It was kludgy as hell, but it accomplished our goal: to showcase the art-of-the-possible if we could get everything right.

We used the best display system (with the largest field of view and highest resolution, aka: the Meta 2); the best hand tracking (a Leap Motion sensor); the best positional tracking (a Vive Lighthouse rig, with a Vive controller hot-glued to the top of the headset to track head movement... yeah... like I said, kludgy...); and an intuitive interface with custom software, built in Unity, that allowed you to move between 2D creation (using a Dell Canvas to draw a shoe) and 3D consumption (software to 'pull' the 2D shoe out into the world as a 3D object for a design review).

Meta 2 AR headset

Leap Motion hand tracking sensor

Vive Lighthouse Tracking Gear

Dell Canvas for design

But it wasn't just these tech components. We also had some of the world's most talented developers, 3D artists, and UI/UX designers build that demo. And that's the other thing we've been missing as an industry: the world's brightest minds. They just haven't entered this space yet en masse because they know... AR/VR isn’t yet worthy of their talent.

We made this demo for Nike in partnership with Dell (who was reselling our headset). It was a re-creation of the CGI from this two-minute concept video: with the exact same 3D assets, same workflow, and same UI/UX.

Nike designer using Meta 2

Virtual prototype in AR

This Nike video still drives me to this day.

When I put that duct-taped contraption on my head, the kludginess disappeared. I was captivated by the future. Or rather, my childhood fantasy. Like a wizard at Hogwarts, I was using my voice to summon different design iterations. I was grabbing orbs out of the air, each one representing a different color or texture. These orbs could be dragged with your fingers and dropped onto the 3D model, changing the aesthetic by tossing invisible objects onto other invisible objects.

POV shot of the demo/video

Except they weren't invisible. Not to me. You could see every detail: the stitching, the fabric, the glow of the materials.

I could explain it in more detail, but just watch the video and then... imagine.

Imagine this type of workflow and collaboration, between both humans and AI, allowing us to move from imagination to reality in the blink of an eye.

The video's script poetically says it all:

"It starts with a question, followed by an idea. On how to make things simpler.

Better.

Or more beautiful.

But it’s not just about what it looks like. It’s how it works.

Which means trying... and failing... and trying again.

To be a designer (or creator) means not being bound by the limits of your tools. But instead, being inspired by them; so that you can focus on what only YOU can do; being creative, being curious, and being critical; exploring the union between function and form, until suddenly...

You know.

And when you're ready to share your work, make sure everyone can see... that the world is a little simpler, better, and more beautiful."

I've seen this video over a hundred times. But those words never fail to stir my soul. And with the advent of generative AI, combined with the promise of spatial computing, they’re more poignant than ever.

People sometimes ask me... when will this tech be here? When will we know it’s arrived?

I answer by showing the Nike video. When that experience exists, in the form of a real product, with a real application, in a real production setting... that's when we've arrived.

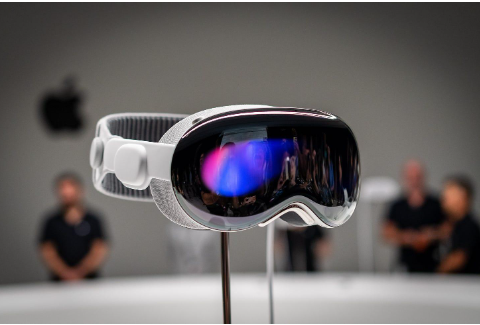

Now, I've yet to try the Apple Vision Pro (AVP). But that's why I'm so excited, and why I think you, dear reader, should be as well. Because the AVP seems to be the industry's FIRST device that will yield an experience of such magical magnitude.

Something that suspends disbelief and blows your mind. Something that compels the world’s top talent to experiment and re-invent human-computer interaction.

Apple Vision Pro

But, as I mentioned in Part I, not everyone has the same level of optimism about AVP.

Immediately after the announcement, the Luddites grabbed their pitchforks and the skeptics had a field day.

Listen, I get it... the notion of being inside the computer is strange and some of Apple's portrayals had Black Mirror vibes (which we'll address later). Such objections are nothing new. Every major tech epoch faced similar doubt in droves.

But when you put the primary objections under a microscope, they just don't hold up. Especially over the fullness of time.

As I also said in Part I, I'm obviously biased. But I've done the work to produce an objective lens. And upon analyzing the major objections, I remain convinced: most haters/pundits are woefully wrong, lacking the right perspective, foresight, and an understanding/appreciation for the nuances of Apple's strategy, timing, and approach.

That's not meant to be a knock. Most people’s and pundits’ perspectives are just limited, lacking exposure to the tech, the impactful use cases, and the problems they'll address.

In my opinion, everything Apple is doing makes perfect sense: the form factor, the timing, the positioning, the use cases; all of it has been meticulously ruminated on, debated, and patiently executed.

So before explaining why, let's do a quick recap of the most common objections/concerns:

It's too expensive! (Price)

I don't want to wear that thing on my face (UI/UX)

What’s the point? What is this good for? (Use Cases)

This is going to ruin humanity! (Societal Impact)

While not an exhaustive list, I view these as the ‘big rocks’ in the proverbial jar of eventual truth (aka: Evan’s optimistic opinions). Let’s dive in.

It's too expensive! (Price)

News flash: the Apple Vision Pro is not going to be a commercial success. Everyone knows this. Including Apple. Regardless, Wall Street is going to be disappointed, the critics will say I told you so, and they will all be missing the forest for the trees.

Making money isn't Apple's goal. Nor is it their metric of success.

Their goal is twofold. First, to attack the most challenging barrier: consumer behavior and imagination. Second, to get into the market, learn, and iterate, all in the wake of consumer inspiration and rising sentiment/demand driven by a premium, mind-blowing UI/UX.

Apple's strategy can be summarized by a tweet Palmer Luckey wrote in 2016:

“Before VR can become something that everyone can afford, it must become something that everyone wants”.

Towards that end... Apple had a choice. They could have waited until the price point was perfect, along with the form factor, the battery power, etc. But are these things their biggest challenge?

No. These things: price, battery, weight, size, etc... they're all bound to be solved by the natural progress of technology. You'd rather be deficient on these vectors, as they will naturally take care of themselves.

Where you can't afford to be deficient is usability, utility, and delight. In other words, it's better to go high end and be super compelling, than low end as another 'me too' device competing in a red ocean, doomed to gather dust (just like every other affordable device). That device is just not worth making, and as Luckey alluded, it won't make AR/VR something everyone wants.

Thus, Apple chose to reach 5 years into the future, spare no expense, and pull next-gen technology into the present. Hence, $3,499.

There's also a simpler argument: the price is irrelevant.

Apple is targeting very early adopters: bougie prosumers and power users with very low price sensitivity. These are folks who would pay $5,000 - $10,000 for the AVP. They just want the latest/greatest.

That said, even at this price point, the complaints remain overblown. Especially on a relative basis (both historically and currently).

Case in point, from Apple's early days: the Lisa, one of the world’s early personal computers.

Similarly, this was the first time most consumers saw innovations like the modern GUI and the mouse; innovations that would shape the future of computing. Innovations that also begged similar questions… why does the average home need this? At the time, most consumers had no idea.

As a result, the Lisa was an abject commercial failure. But it paved the way for Apple's success with the Mac. It also awakened the world to the potential of personal computing.

The Lisa cost roughly $10k in 1983, or about $29,400 in today's prices. Not to mention, the Macintosh, Apple's most iconic breakthrough, launched at $2,495 in 1984. That’s about $7,000 in today's prices…

From a more local & relative perspective: the Magic Leap 2 AR headset is $3,299; the Microsoft HoloLens 2 is $3,500. The Varjo, the most direct comparison as a mixed reality passthrough device... it's $7,100!

The AVP is right in the ballpark at $3,499, and vastly superior on just about every dimension.

It's also worth considering what the AVP strives to replace: powerful workstations, laptops, and high-end displays. People in Apple's target market spend $2k - $5k on nice workstations/laptops, and another $2k - $3k on high-end displays, all without blinking an eye.

The AVP can replace these products, and then do SO much more...

And so, I rhetorically ask... is the AVP really that expensive?

I don't want to wear something on my face (UI/UX)

My response will seem trite, but I think it will prove true.

This is a classic case of "don't knock it till you try it."

I know, I know. I haven't even tried the AVP myself. But I've spoken with people who have: from grounded analysts to XR skeptics. They’ve all had a similar response, falling somewhere along the lines of...

“Holy shit”

"I felt like I had super powers"

"It was remarkable and exceeded my wildest expectations"

I'll have to circle back on this after I try it, but here’s my bet: the user experience is going to be so compelling that it trumps the awkwardness/friction of wearing something on your face.

At least in the contexts they've optimized for: productivity & visualization.

The input modality is said to be the most mesmerizing part, i.e., eye and hand tracking in lieu of a mouse, keyboard, or screen taps.

With the AVP, you just follow your instincts, using your eyes and subtle hand motions to control virtual objects, as if they are actually in the real world. It feels like you have magical powers and it just works. Your intuition is the controller.

Sure, the jury remains out until it ships. But this seems like the first AR/VR product that is just... buttery.

What the hell do I mean by buttery?

It comes from Nick Grossman’s 'butter thesis' (Nick is a partner at Union Square Ventures). The thesis describes product interactions & experiences that just absolutely nail it. What 'it' is exactly is hard to describe… but you know it when you see it. It's just frictionless: intuitive, smooth, and delightful.

AR/VR today is cool and novel. But I don’t think anyone would call it buttery. It’s plagued with all kinds of UI/UX paper cuts that make it very hard to do real work or consume content for hours on end.

As much as I love AR/VR, I'm still painfully aware of the brick on my face, constantly sliding off, noticeably heavy, hot, with non-intuitive controllers, etc.

Now, I'm sure the AVP will have its rough edges. All V1.0 products do. But fortunately... this is Apple we're talking about.

Unlike other players in this space, most people will give Apple the benefit of the doubt.

More so than perhaps any other company, Apple knows how to make things desirable. Which is a key pillar of their strategy: social engineering. They’re going to make this thing cool and they have a plan to do so. One example is hyper personalization.

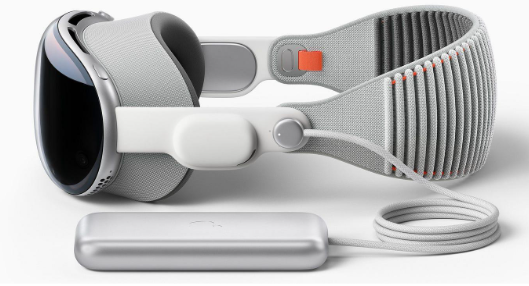

Apple is going to ensure your AVP fits like a glove, while also offering the opportunity for self-expression and style via custom aesthetics.

At first, you’ll have to make an appointment at an Apple retail store to buy an AVP. They're carving out entire sections of the stores for headset demos and sizing, allowing associates to select and customize the right accessories for the buyer. This will ensure a snug-fitting headband (of which there will be many styles), the perfect light seal (informed by a facial scan), and the right prescription lenses.

Considering the amount of inventory, the number of variations, the in-store logistics, the demos, etc… this will be the most complex retail rollout in Apple's history. A feat few beyond Apple could pull off, and a compelling storyline to monitor through 2024.

What’s the point? What is this good for? (Use Cases)

Steve Jobs famously said, "You've got to start with the customer experience and work backwards to the technology. You can't start with technology and then try to figure out where to sell it."

Many people think the AVP flies in the face of this wisdom. They think this is fancy tech looking for a problem. I think the AVP strategy falls somewhere in the middle, largely because it has to; the form factor and UI/UX are just too new for anyone to have all the answers.

In Part I, I said the following: "In hindsight, it's easy to say the iPhone's impact was obvious at launch. But was it? Sure, it launched with what became killer apps: calls, email/messaging, browsing, and music. But, similar to the VisionPro's focus use cases, these things weren't entirely new. It was things we were already doing, just better on multiple vectors."

Similar to the iPhone strategy, Apple is starting with a simple and practical use case: screen replacement, aka: doing things some people are already doing, just better.

The use cases for 'infinite display' will be compelling for a lot of people: remote workers, digital nomads, software programmers, finance traders, data analysts, 3D artists/designers, gamers, movie buffs, the list goes on.

Virtual Displays

The total addressable market for these folks alone is in the tens of millions, if not hundreds of millions. Upon realizing they can become Tom Cruise from Minority Report, these people are going to line up in droves to buy the AVP.

Now, this use case doesn’t come without its haters, garnering comments along the lines of "ugh, but it’s so isolating". But this response feels silly to me. Isolation is the point. Many of these customers work remotely: from home, alone, and often on the road. What they do requires 'deep work' that is inherently isolating. If anything, the AVP is a device that could help close us off from endless distraction & interruption, allowing us to more easily tap into states of flow & ideal work conditions.

But if 'isolation' is your concern, know that collaboration will be the killer feature of spatial computing’s killer apps.

To be sure, it was odd that Apple barely showed 'multiplayer' use cases in their keynote. Quite odd. Collaboration is where the true magic happens. Particularly in AR, when you both have a completely shared context within both the physical and digital realms, bonded over a shared hallucination.

These 'shared hallucinations' are going to be most impactful within work settings.

Whether Apple likes it or not (because they don’t really care about enterprise), the enterprise will be their biggest/best opportunity short term, i.e., corporations buying the device for use cases like training, design, and sales & marketing.

Across these use cases today, even the world's most advanced companies are stuck in the 90s.

CAD designers create 3D things with 3D design tools, but go right back to 2D pictures in PowerPoint when it comes time to share/present.

Training departments use laughable videos, PowerPoint decks, and text-filled PDFs with static pictures to explain complex and sometimes dangerous procedures. And they wonder why they can’t recruit, inspire, and retain digitally native, 'experience craving' millennials/Gen Z…

Sales reps all too often take a similar approach. Their customers are better off just reading the same content online or getting a product analysis from ChatGPT.

Across all of these examples, people are fundamentally trying to transfer knowledge by conveying an 'experience' in a woefully non-experiential way. This ‘knowledge transfer’ problem becomes increasingly acute in the face of an aging workforce, worker displacement (as AI eats more jobs), and within an era of customization/personalization in product design & sales.

This is why I prefer to call spatial computing, 'experiential computing'.

Within endless scenarios (be it work, education, or play) the goal is to capture, understand, or convey an 'experience' of some kind: what it's like to wear a shoe, what it's like to navigate a factory floor, what it's like to put your hands on the wheel of a car.

We can try to use a bevy of words, images, and videos to spark imagination. And maybe imagination will get you 10-50% of the way there.

But what if we could turn imagination into reality? What if we could directly experience the thing itself, in its entirety? What if we could transfer knowledge at 80-100% levels of fidelity, without information loss?

Speaking of ‘direct experience’… Apple’s other focus use case might also be enough to sell out the AVP in year 1, and that’s immersive sports & live entertainment (e.g. any kind of live show/performance, music, plays, comedy acts, etc.)

They’re investing heavily in this area, with their own camera hardware for 360/volumetric capture, their own file format for this media type, and their own streaming platform via the acquisition of NextVR.

NextVR 360 camera

I thought NextVR was by far the most compelling consumer VR app to date. It was also a major driver of Oculus Quest sales, putting users courtside at NBA games, on the sidelines of NFL games, or in the front row of a Taylor Swift concert (don’t judge).

These ‘real-world’ tickets cost anywhere from $1,000 to $10,000. Taylor rocks, and so do live sports, but I’m not paying that. Neither are 9 out of 10 people.

If you told me I could be courtside, alongside friends from around the world, week in, week out, for $3,499 and a small monthly subscription? I’m all over it. And I think many, many other people will feel the same way.

So as far as use cases are concerned, ‘infinite display’, collaboration + knowledge transfer, and live sports/entertainment alone will be enough to drive demand and establish product-market fit.

But this is the tip of the iceberg. Just like no one predicted the App Store, and the ensuing explosion of new apps, we can’t predict all the innovation that’s brewing amidst the long tail of Apple developers who are already diving into the AVP SDK & developer docs.

Out of the millions of apps in the App Store, the developers behind a healthy chunk of them are brainstorming as we speak about what their apps could look/feel like in a spatial world. And I can’t wait to see the results…

This is going to ruin humanity! (Societal Impact)

I beg to differ.

To the contrary, spatial/experiential computing just might be a key ingredient to humanity’s salvation, especially with the advent of AI.

There’s a variety of philosophical and practical reasons why. I’ll just hit my two favorites.

Philosophically, consider all the complex and daunting problems we face in the world. Most of them lack answers, and in our search for solutions, it’s hard to say where to start.

But one place that is hard to refute, and that will certainly help us find the right answers/solutions, is better communication & collaboration: between employees, executives, & scientists. Between countries, companies, and local governments. Between political groups, their leaders, and their polarized constituents.

Poor communication & collaboration sit at the heart of all our issues, causing a lack of empathy and understanding and, ultimately, poor decision making, low alignment, and very little progress.

To illustrate the power of spatial computing for communication & collaboration, I fall back to a section from my essay, ‘How to Defend the Metaverse’.

It quotes one of the cyberspace/metaverse OG's: Terence McKenna.

McKenna says, "Imagine if we could see what people actually meant when they spoke. It would be a form of telepathy. What kind of impact would this have on the world?"

McKenna goes on to describe language in a simple but eye-opening way, reflecting on how primitive language really is.

He says, "Language today is just small mouth noises, moving through space. Mere acoustical signals that require the consulting of a learned dictionary. This is not a very wideband form of communication. But with virtual/augmented realities, we'll have a true mirror of the mind. A form of telepathy that could dissolve boundaries, disagreement, conflict, and a lack of empathy in the world."

This form of ‘telepathy’… i.e. a higher bandwidth, more visual form of communication, i.e. the ability to more directly see or experience an idea, an action, a potential future… this will not just benefit human to human communication, but also human to machine. Which brings us to my practical response du jour.

Practically, we need to consider how humans evolve and keep up in the age of AI.

We’re briskly moving from the age of information to the age of intelligence. But intelligence for whom?

Machines are inhaling all of human knowledge. As a result, every person and every company will have the ultimate companion; capable of producing all the answers, all the options, and all the insights…

How do we compete and remain relevant? Or perhaps better said… How do we become a valuable companion to AI in return?

Just like machines leveled up via transformers and neural nets, we too need better ways to consume, analyze, and ‘experience’ information. Especially the information AIs produce, which will come in droves and in a myriad of formats.

AI is going to produce answers, insights, and truth for all kinds of things: new ideas, products, stories, moments in time, scenarios, plans, and my personal favorite: all the things that remain abstract and unseen by most, like space, stars, planets, the deep sea, the deep forest, the inner workings of the human body & mind. The list goes on.

AI is going to reveal things previously mysterious, complex, and otherwise impossible to fully grasp.

As it does so… how can AI best communicate its findings back to humans? And how can we fully grok, parse through, and become fully empowered to act?

More often than not, our answer back to the AI is going to be, “don’t tell me, damnit, show me”.

Spatial computing will be the ultimate tool for helping AIs ‘show’, and helping humans ‘know’, ushering in an age of ‘experience’ in tandem with the age of ‘intelligence’.

As a result, humans will be better able to remain in the loop as the final decision maker, fully empowered to add the human touch and tweak the final outcome/output in a way that only humans know how, i.e. through feeling, intuition, and empathy, a la this essay: ‘How to find solace in the age of AI: don’t think, feel’.

Tech vs. Tech

In closing, there is one more common concern within this realm that I admittedly don’t have the best answer to. At least not yet. And that is… once we’re ‘in the loop’ with AI, and spending more time ‘in the machine’ with spatial computing… how do we retain the best parts of humanity that are obviously negatively impacted by technology?

Things like our attention and mental health, or our physical movement, social skills, and time in nature.

My prediction is that we’re going to get increasingly good at using tech to combat tech.

Meaning… there are apps and tools that we can build to shape our relationship with tech, negate its afflictions, and build better habits & social connections.

Apple is already doing this today, and I thought it was one of the more compelling parts of the WWDC presentation. They showcased apps for journaling to aid with emotional awareness. Meditation for mindfulness. Fitness & outdoor hobbies of all types, with unique ways to measure, gamify, and socialize/connect with others, boosting motivation & consistency along the way.

I think this trend is going to accelerate over the coming years. It’s already a cottage industry, with startups such as TrippVR for meditation & mental health, and FitXR for VR fitness.

The AVP’s arrival is going to enhance and legitimize these use cases, and over time, shift people’s relationship with technology while reducing the afflictions born of abstracted, ‘flat computing’. Or the afflictions born of boxes tethered to walls and TVs (aka: an Xbox or PS5). These current form factors are what keep kids/people stuck inside, isolated, and socially inept.

In contrast… AR, in its ultimate form, will free kids from the confines of a screen and a living room with an outlet, thrusting them back into nature, back into face-to-face contact, and back into a world longed for by prior generations. A world of scratched knees from a treasure hunt in the park, of youthful pride from a fort forged in the woods, or of confidence from winning an argument while playing make-believe in the backyard.

Except this time, the treasure becomes real, the forts become labyrinths, and the figments of make-believe become not so make-believe…

Thanks for taking the time to read Evan's essay. Let us know what you think about this perspective. And if you enjoyed this piece, don’t forget to check out more of his essays and subscribe over at MediumEnergy.io. Here are some of our personal favorites:

- Finding solace in the age of AI

- The Ultimate Promise of the Metaverse