On May 2nd, we hosted a webinar on the “AR Cloud” with an incredible panel moderated by Charlie Fink. It featured presentations from Ori Inbar of Super Ventures and AWE, as well as from pioneering startups working to enable the creation and population of the AR cloud: Anjney Midha (Ubiquity6), Matt Miesnieks (6D.ai), Ghislain Fouodji (Selerio.io), and Ray DiCarlo & David (YouAR).

Perhaps the biggest shift brought by AR-enabled mobile phones is the use of the camera as the interface. But the camera needs something to detect, or "see": digital content anchored to specific places in the physical world. We have come to think of this geolocated content as "the AR Cloud". The implications of SLAM-capable geolocated content are profound; the world will be painted with data. The technology to enable this dramatic development is in its infancy, although several promising startups are tackling it right now. Some, like Ubiquity6 and YouAR, offer complete solutions, while others, such as 6D.ai, offer key technologies that let developers create their own apps.

The presentations explored key concepts around the AR cloud, such as:

- The role of computer vision, AI, and sound.

- The function and form of the universal visual browser: how will it enable all AR content to be found, and could existing browsers play a role?

- Will there be open standards (so enterprises and individuals can populate the AR cloud)?

- If a dozen developers painted data on a landmark, like the Golden Gate Bridge, how would a user sort through it?

- Will there be a Google for visual search? How would that work? How important are filters?

- What are the opportunities today for developers, enterprises, and individuals?

Q & A

How do you capture spatial data that is aligned to real world coordinates if GPS isn't accurate enough? What is the math behind it?

It's too complex to get into here, and there is no single solution that covers all use cases. Googling "outdoor large scale localization" (add "gps denied environments" for more fun) will uncover plenty of papers and all the math you can handle.

In layman's terms, GPS is used to get into the general area, then computer vision takes over. Using AI recognition and/or point cloud matching, the system determines the exact 3D coordinates of the phone relative to the real world (ground truth).

The trick is to handle cases where there is no pre-existing computer vision data to match against, and to make it work from all sorts of angles and lighting conditions, and with/without GPS. It's still a very active domain in computer vision research. - Matt Miesnieks
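The coarse-then-fine idea above can be illustrated with a toy sketch. This is not 6D.ai's method; it assumes a flat 2D world (x, y and heading instead of full 6DoF), coordinates already converted to a local metric frame (east, north in metres), and feature correspondences already found by some matcher. The function names `candidates_near` and `align_2d` are hypothetical. GPS is used only to discard map features that are too far away; the exact pose then comes from a least-squares rigid alignment of the matched points.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def candidates_near(gps_fix: Point, map_points: Dict[str, Point], radius_m: float) -> Dict[str, Point]:
    """Coarse step: keep only map features within radius_m of the (noisy) GPS fix.

    Assumes everything is already in a local metric frame (east, north), in metres.
    """
    gx, gy = gps_fix
    return {fid: p for fid, p in map_points.items()
            if math.hypot(p[0] - gx, p[1] - gy) <= radius_m}


def align_2d(device_pts: List[Point], map_pts: List[Point]) -> Tuple[float, float, float]:
    """Fine step: least-squares rigid alignment (rotation + translation) that maps
    device-frame points onto their matched map-frame points.

    device_pts[i] is assumed to correspond to map_pts[i]. Returns (theta, tx, ty),
    the device's heading and position in the map frame.
    """
    n = len(device_pts)
    dcx = sum(p[0] for p in device_pts) / n
    dcy = sum(p[1] for p in device_pts) / n
    mcx = sum(p[0] for p in map_pts) / n
    mcy = sum(p[1] for p in map_pts) / n
    # Accumulate dot and cross products of the centred correspondences;
    # the optimal rotation angle is atan2(sum of crosses, sum of dots).
    s_dot = s_cross = 0.0
    for (dx, dy), (mx, my) in zip(device_pts, map_pts):
        ax, ay = dx - dcx, dy - dcy
        bx, by = mx - mcx, my - mcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated device centroid onto the map centroid.
    tx = mcx - (c * dcx - s * dcy)
    ty = mcy - (s * dcx + c * dcy)
    return theta, tx, ty
```

A real system works in 6DoF, has to find the correspondences itself, and must reject bad matches (for example with RANSAC) before trusting the alignment; that is where most of the hard research Matt mentions lives.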

There are several CV solutions that localize devices within point clouds. The trick is to understand the relationship between one "localization" and another. Our approach involves positioning AR content and devices with coordinates tethered to multiple trackable physical features. As devices use our system, they calculate and compare relative positioning data between trackable features, generating measurements (and associated uncertainties). These trackable features are organized into emergent hierarchical groups based on those measurements. Our system then uses statistical methods to improve its confidence in the relative position data as additional measurements are made between the trackable features in a group.

In plainer terms, imagine that you had a marker taped to a table to create a rudimentary form of persistent AR but that marker also knew the relative position of every other marker taped to every other table in the whole world. We call our version of this system LockAR. - Ray DiCarlo
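Ray doesn't specify which statistical methods LockAR uses. As a generic illustration of how repeated measurements with uncertainties can raise confidence, the sketch below fuses several observations of one marker's offset from another using an inverse-variance weighted mean. It assumes independent, unbiased Gaussian errors with the same spread in x and y; the `Measurement` type and `fuse` function are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Measurement:
    """One observation of marker B's offset from marker A, in metres,
    with a standard deviation describing its uncertainty (assumed isotropic)."""
    dx: float
    dy: float
    sigma: float


def fuse(measurements: Iterable[Measurement]) -> Tuple[float, float, float]:
    """Inverse-variance weighted mean of repeated relative-position measurements.

    Returns (dx, dy, sigma). Precise measurements count for more, and the fused
    sigma shrinks as more independent measurements are added.
    """
    w_sum = wx = wy = 0.0
    for m in measurements:
        w = 1.0 / (m.sigma ** 2)  # weight = 1 / variance
        w_sum += w
        wx += w * m.dx
        wy += w * m.dy
    if w_sum == 0.0:
        raise ValueError("need at least one measurement")
    return wx / w_sum, wy / w_sum, math.sqrt(1.0 / w_sum)
```

For example, two equally uncertain readings of 2 m and 4 m fuse to 3 m with an uncertainty about 1/√2 of either reading, which is the "more measurements, more confidence" effect described above.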

How will ALL of these different AR Cloud providers work together? Are they all compatible?

Good question. Some of us are trying to figure out Open Standards, but honestly I think that's premature. First we need to show the market that these enablers are valuable. The market is nascent and we are all working to grow it. Interop just isn't a problem anyone has right now. Down the road, who knows. Some forms of data will probably be "open" and others proprietary; this will probably be use-case dependent, and we don't know the use-cases yet. - Matt Miesnieks

We are in a great innovation period! The giants will buy up the companies they like and leverage their network effects to push them into the consumer world. Compatibility will exist only when its value outweighs the profit of closed systems. We'll see. The decision to give away proprietary technology when you are ahead is a hard one, but often the right one if you have a big enough vision and umpteen billions of dollars to help prop up AR Cloud SaaS models. - David

How will you handle point cloud data sets in mobile on existing 3G networks?

6D.ai does everything on device, as close to real-time as possible (including generating point clouds and meshes). We minimize data upload and download. We are targeting Wi-Fi and LTE networks initially. 3G will work but will be slower; we don't yet know whether it will be too slow. - Matt Miesnieks

Clouds can be sparse; they do not have to be that "heavy". They will be cached and loaded as a device gets within range. We can reduce the number of polygons and limit the level of RGB fidelity, but it's all going to be better on 5G. 5G is coming soon, and its arrival will transform the AR Cloud into a mass media platform accessible by all, with infinite data and crazy high bandwidth. - David & Ray from YouAR
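Two of the techniques mentioned above, keeping clouds sparse and loading them only when a device is in range, can be sketched generically. This is not YouAR's or 6D.ai's code: `voxel_downsample` thins a point cloud by averaging all points that fall in the same cube of a chosen size, and `TileCache` is a hypothetical client-side cache that fetches a map tile when the device comes within `load_radius` and drops it once the device is beyond a larger `evict_radius` (the gap avoids reloading tiles when a user hovers near a boundary). The `fetch` callable stands in for a network request; distances are assumed to be in metres in a local frame.

```python
import math
from typing import Callable, Dict, List, Tuple

Point3 = Tuple[float, float, float]


def voxel_downsample(points: List[Point3], voxel: float) -> List[Point3]:
    """Keep one averaged point per cubic voxel of side `voxel` metres."""
    buckets: Dict[Tuple[int, int, int], List[Point3]] = {}
    for p in points:
        key = (math.floor(p[0] / voxel), math.floor(p[1] / voxel), math.floor(p[2] / voxel))
        buckets.setdefault(key, []).append(p)
    out = []
    for pts in buckets.values():
        n = len(pts)
        out.append((sum(p[0] for p in pts) / n,
                    sum(p[1] for p in pts) / n,
                    sum(p[2] for p in pts) / n))
    return out


class TileCache:
    """Hypothetical range-based cache of map tiles keyed by tile id."""

    def __init__(self, tile_centres: Dict[str, Tuple[float, float]],
                 load_radius: float, evict_radius: float,
                 fetch: Callable[[str], object]):
        if evict_radius < load_radius:
            raise ValueError("evict_radius must be >= load_radius")
        self.tile_centres = tile_centres
        self.load_radius = load_radius
        self.evict_radius = evict_radius
        self.fetch = fetch  # stands in for downloading a tile
        self.loaded: Dict[str, object] = {}

    def update(self, x: float, y: float) -> List[str]:
        """Load tiles in range of (x, y), evict distant ones; return newly loaded ids."""
        new = []
        for tid, (cx, cy) in self.tile_centres.items():
            d = math.hypot(cx - x, cy - y)
            if d <= self.load_radius and tid not in self.loaded:
                self.loaded[tid] = self.fetch(tid)
                new.append(tid)
            elif d > self.evict_radius and tid in self.loaded:
                del self.loaded[tid]
        return new
```

Calling `update` with each new device position keeps only nearby, already-thinned data on the phone, which is what makes slower networks more workable.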

What is the threat of one company controlling access to "the" AR Cloud? Doesn't that assume there can be only one? And isn't the number of potential AR clouds infinite, just multiple layers over the same physical space?

This question confuses the enabling infrastructure and the content. There can be infinite content in one place. As for the enabling infrastructure, it's too early to tell how the market will emerge. It's unlikely that one company will control everything. Nearly all tech markets have a dominant leader and a strong #2, then lots of small players. The ARCloud market will eventually fit this model, but which services and products that will involve, who knows; the term ARCloud is too broad right now. - Matt Miesnieks

We agree! There will be many disparate AR Clouds at first, each using its own CV methods to understand physical space. At some point, protocols may be developed that make some clouds obsolete and allow others to coexist. We incorporate otherwise incompatible CV localizations on a common map. Most likely, Apple, Google, and Microsoft will continue to develop in their separate, siloed ecosystems for a while. Everyone will be looking for a near-term solution to bring to the table while we wait for the giants to open their stores of feature sets for common use. - Ray & David from YouAR
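One generic way to picture "incompatible localizations on a common map" is a graph whose nodes are coordinate frames (each provider's map, each shared trackable feature) and whose edges are known relative transforms. Content expressed in one frame can be re-expressed in another by chaining transforms along a path. The sketch below is a hypothetical illustration of that idea in 2D (x, y, heading) using breadth-first search; it is not YouAR's implementation, and a production system would optimize over all measurements at once (pose-graph optimization) rather than trust the first path it finds.

```python
import math
from collections import deque
from typing import Dict, List, Optional, Tuple

# (x, y, theta): pose of a child frame expressed in its parent frame.
Pose = Tuple[float, float, float]


def compose(a: Pose, b: Pose) -> Pose:
    """a = frame1 in frame0, b = frame2 in frame1 -> frame2 in frame0."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            at + bt)


def invert(a: Pose) -> Pose:
    """Frame1 in frame0 -> frame0 in frame1."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)


def transform_between(edges: Dict[Tuple[str, str], Pose], src: str, dst: str) -> Optional[Pose]:
    """Breadth-first search over frames linked by known relative transforms.

    edges[(p, c)] is the pose of frame c in frame p. Returns the pose of dst
    in src, or None if the two frames are not connected.
    """
    graph: Dict[str, List[Tuple[str, Pose]]] = {}
    for (p, c), t in edges.items():
        graph.setdefault(p, []).append((c, t))
        graph.setdefault(c, []).append((p, invert(t)))
    seen: Dict[str, Pose] = {src: (0.0, 0.0, 0.0)}  # pose of each frame in src
    queue = deque([src])
    while queue:
        f = queue.popleft()
        if f == dst:
            return seen[f]
        for nxt, t in graph.get(f, []):
            if nxt not in seen:
                seen[nxt] = compose(seen[f], t)
                queue.append(nxt)
    return None
```

For example, if provider A's map sees a shared feature at (1, 0) and provider B's map sees the same feature at (0, 2), both with no rotation, then `transform_between` places B's origin at (1, -2) in A's frame, so content anchored in either map can be shown in the other.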

Why the ARCF? We all share the roads, why not share an AR Cloud? Wouldn’t we all be better off with a generally common one?

Different AR Clouds will begin to pop up; we must unify this somehow, or at least agree to index them together coherently.

Get in a room (or chat room) and reach out to everyone. Do simple things first, realize basic goals, test out the first collection of applications.

Sign up for our ARena SDK and use it as a way to populate the ARCF's persistent, global map! The ARCF holds a collection of dynamic and versatile ".6dof" files: openly available SLAM maps. These files are generated by giving end-users a way to "scan" environments and saving the data in a form that other devices on our network can use to "see" the same space.

In the future how do we create experiences that don't care what device you are using?

6D.ai is working to solve this; we intend to support all major AR platforms and hardware. Partly this also depends on the creation tools being cross-platform (e.g., Unity) and the platforms being open (i.e., not Snap). - Matt Miesnieks

Our approach was to build an SDK (available soon) so developers can immediately start building AR applications in Unity, with users able to interact with each other across ARCore- and ARKit-enabled devices. A key goal was to simplify complex 3D interactions and to allow both devices to see AR content together in a common space. Developers who would rather stay iOS or Android native can use our soon-to-be-available UberCV SDK, or equivalents that other companies will release soon, which will let you share a common space without depending on any particular backend solution.

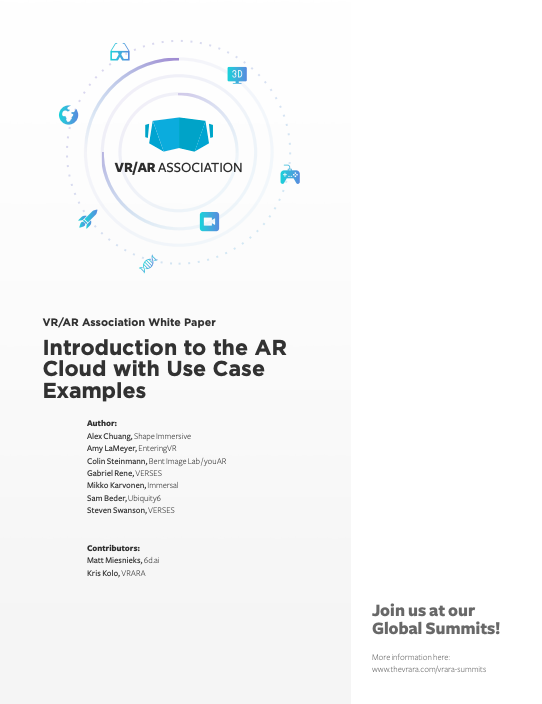

Here is an example from YouAR.