They also have Internet of Things (IoT) systems and grid modelling software. Just over a year ago, Siemens made a big announcement around their Xcelerator strategy, under which they were looking to team up in a more structured way with many new and existing partners.

They created interoperability because everyone knows you can’t do it alone if you want to digitalise factories, grids, and industries. With the new system, you can mix and match with various partners. They also announced the Industrial Metaverse, a key strategic imperative for the company for the past 12 months.

In a big announcement, they revealed a month ago that they had invested about two billion dollars in a new factory in Singapore — a completely automated industrial metaverse factory.

They also announced a 500 million euro investment in Erlangen, just north of Nuremberg, one of their big campuses where they’re building a new technology research centre for automation, digital twins, and the industrial metaverse to take the technologies to the next level.

I’ve been collaborating with them and plan to visit their campus in Erlangen, where they opened the new Industrial Metaverse Experience Centre last week.

Over the last 12 months, we’ve seen them talk about the industrial metaverse along with NVIDIA, Nokia, and many other system integrators.

If you’re at Hanover Messe, everyone’s talking about digital twins and generative AI and how they can bring [the technologies] together to deliver what we’ve been talking about as Industry 4.0 for many years. That’s where it’s headed.

XR Today: What is the value of digital twins to industries and enterprises?

Kevin O’Donovan: Firstly, it depends on how you define a digital twin. Many people in the industry will say that they’re not new. At their basic level, a digital twin is a digital representation of something in the real world.

This could be a 3D computer-aided design (CAD) model, real-time data from my IoT systems, or a real-time data graphical user interface that’s a digital twin for my current production.

Given the advancements in core technologies, whether from Intel, AMD, ARM, NVIDIA, or others, we’re seeing more and more graphics, AI, and compute capabilities. That lets digital twins take in more data from the real world by pooling it from multiple silos across different applications that don’t talk to each other.

We’re also seeing the next generation of digital twin technology, and many companies are adding more and more to it: stronger simulation capabilities, generative AI to produce synthetic data for more simulations and scenario planning, and more data from real-time IoT systems.

However, we’re starting to see data pooled from multiple siloed digital twins. That’s what platforms like NVIDIA’s Omniverse, Siemens, Bentley’s iTwin, and others are doing with many of their partners, because we need to pool data from those different sources.

You then get this next-generation digital twin that offers a holistic view of everything in a digital format with 3D spatial interfaces.

These can perform scenario planning for business resiliency, maintenance, and optimisation for grids, factories, product designs, and recycling. It’s like digitisation on steroids.

Additionally, in our world, we like coming up with new terms. We had the embedded business for many years and didn’t call it the industrial IoT (IIoT) back then. Now the Metaverse is the next game in town, with digital twins as the foundational building block.

You then speak about terms like XR, VR, AR, generative AI, 5G networks, and the latest edge computing from Intel, AMD, and NVIDIA. After bringing all of this together, we’re now at a stage where we’re taking digital twins to the next level.

I often tell people, “Look, digital twins mean different things to different people.” Great things are taking shape at the Digital Twin Consortium, where people can see digital twin maturity models.

These frameworks allow people to determine what digital twins mean, which ones exist today, and what problems they solve. As cool as the technology is, we must see if it makes you more money, saves money, or increases efficiency.

XR Today: There are a lot of use cases developing for digital twins, notably from the likes of NVIDIA, Unity, Unreal Engine, and GE Digital. How are they being implemented in real use cases?

Kevin O’Donovan: People may say that digital twins aren’t new. We’re taking them to the next level now that we have a platform and immersive experiences.

This doesn’t always mean you’re in VR or XR, but you could instead view a 3D model of your factory [and] see what’s going on. This can allow you to reconfigure things and determine if you can boost production based on real-time data from the current production line.

We can also see if anything will break, if new shifts are needed, or if systems require predictive maintenance before speeding up production.

Conversely, two of us could be in different parts of the world and collaborate in the same environment. We’re not looking at two SAP screens but are actually in immersive environments.

It’s also not like a Zoom or Teams call anymore. We’ve had data for years showing that what you learn sticks better if you’re trained in immersive ways, because we live in an immersive world.

So, as long as we use these technologies from Industry 4.0—the industrial metaverse—we can stay competitive as a company, industry, or country. Where we’re headed with automation, design, virtual worlds, and other things can also add to your sustainability story.

All the new infrastructure is being built for our [sustainable] energy transition, whether that’s electric vehicle (EV) factories, new grids, wind farms, hydrogen plants, or carbon capture plants. Everything is now being done in a digital twin model so they can plan everything before physically building infrastructure.

However, if you’re at an existing factory and have equipment from the last 10 to 15 years, your first step on that digital transformation journey is to put in all the IoT equipment to record real-time data, which is what makes predictive maintenance possible.

That’s the journey we’re all on. It’s fascinating times, [and] people should not ignore this stuff.

XR Today: What did you think of PricewaterhouseCoopers’ Four Pillars to the Metaverse? Do they resonate with how the industrial metaverse is developing?

Kevin O’Donovan: PwC’s four pillars—employee experience, training, client experience, and metaverse adoption—are key performance indicators (KPIs). Anybody in the industry wanting to invest will ask about the return on investment (ROI) level.

This can happen with happier employees, better collaboration with metaverse technologies, and other metrics. However, if you go to other companies, they may use other methodologies regarding digitalisation, with different ways to measure the success of digitalisation projects in your company, city, or country.

These KPIs let people know what success looks like and work towards the same goals. [However], if it doesn’t help client or employee experiences, people must consider why they use it.

Such frameworks are key. We’ve seen that, in the industry, people install technologies because they’re ‘cool.’ They have to have an ROI, and that’s one of the key drivers for why the industrial metaverse is not going away.

Digitalisation will become the only game in town, leading to better digital twins, resiliency, simulation capabilities, and ‘what if’ scenarios—all in real-time.

XR Today: How have digital twins and the industrial metaverse evolved over the years to improve infrastructure?

Kevin O’Donovan: I often chat with people in the industry, and they say, “Haven’t we been doing that for years? We don’t just build wind farms and hope they’ll work.” I agree with this.

There’s a lot of experience, competence, Excel models, and simulations that go into these projects. How do you put the mooring lines for offshore, floating wind turbines?

In the past, we didn’t have the computing, algorithms, or AI to generate more synthetic data and just run with it. Previously, we’d run ten simulations, some of which were paper-based.

Now, you can run hundreds of thousands of simulations. Using these simulations, we can now explore ‘what if’ scenarios like the tide, temperature, and climate changes, including that ‘one-in-a-hundred-year storm.’

Almost every utility on the planet uses software to design distribution grids, allowing engineers to simulate what happens if another ten people plug in their electric vehicles, the effects on substations, and other issues.

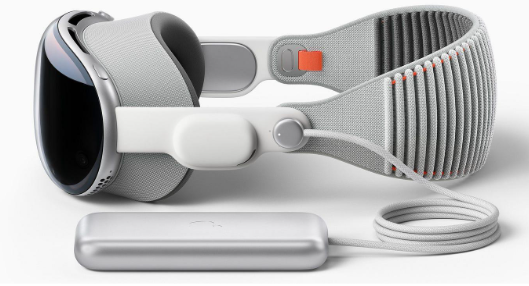

This stuff is happening, and we can’t ignore it, especially the efforts from PwC, Siemens, NVIDIA, Nokia, and many others. While we talk about the Apple Vision Pro, Meta Quest Pro and 3, and [metaverse platforms like] Decentraland, the real story is happening in the industry and enterprise.

Keep an eye on it because it’s not going away.