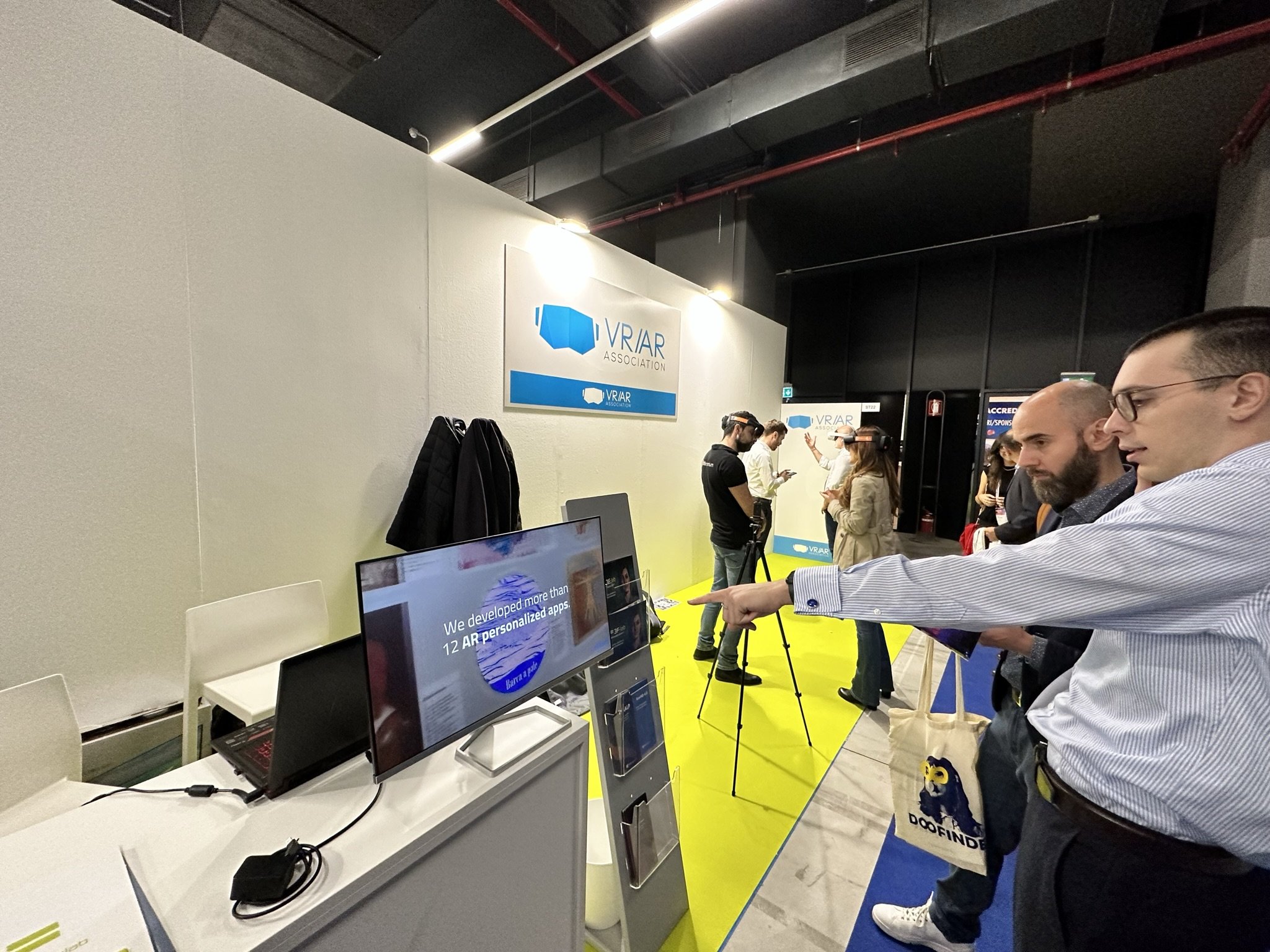

The VR/AR Association held a VR Enterprise and Training Forum yesterday, May 24. The one-day event, hosted on the Hopin remote conference platform, brought together industry experts to discuss the business applications of XR techniques and topics including digital twins, virtual humans, and generative AI.

The VR/AR Association Gives Enterprise the Mic

The VR/AR Association hosted the event. In addition to keynotes, talks, and panel discussions, the event included opportunities for networking with other remote attendees.

“Our community is at the heart of what we do: we spark innovation and we start trends,” said VR/AR Association Enterprise Committee Co-Chair, Cindy Mallory, during a welcome session.

While there were some bona fide “technologists” in the panels, most speakers were people using the technology in industry themselves. Hearing from “the usual suspects” is nice, but VR/AR Association fora are rare opportunities for industry professionals to hear from one another on how they approach problems and solutions in a rapidly changing workplace.

“I feel like there are no wrong answers,” VR/AR Association Training Committee Co-Chair, Bobby Carlton, said during the welcome session. “We’re all explorers asking where these tools fit in and how they apply.”

The Convergence

The workplace is changing so rapidly not only because of the pace at which technologies are changing, but also because of the pace at which they are becoming reliant on one another. This is a trend that a number of commentators have labeled “the convergence.”

“When we talk about the convergence, we’re talking about XR but we’re also talking about computer vision and AI,” CGS Inc President of Enterprise Learning and XR, Doug Stephen, said in the keynote that opened the event, “How Integrated XR Is Creating a Connected Workplace and Driving Digital Transformation.”

CGS Australia Head, Adam Shah, was also a speaker. Together the pair discussed how using XR with advanced IT strategies, AI, and other emerging technologies creates opportunities as well as confusion for enterprise. Both commented that companies can only seize the opportunities provided by these emerging technologies through ongoing education.

“When you put all of these technologies together, it becomes harder for companies to get started on this journey,” said Shah. “Learning is the goal at the end of the day, so we ask ‘What learning outcomes do you want to achieve?’ and we work backwards from there.”

The convergence isn’t only changing how business is done; it’s also changing who’s doing what. That was much of the topic of the panel discussion “What Problem Are You Trying to Solve For Your Customer? How Can Generative AI and XR Help Solve It? Faster, Cheaper, Better!”

“Things are becoming more dialectical between producers and consumers, or that line is melting where consumers can create whatever they want,” said Virtual World Society Executive Director Angelina Dayton. “We exist as both creators and as consumers … We see that more and more now.”

“The Journey” of Emerging Technology

The figure of “the journey” was also used by Overlay founder and CEO, Christopher Morace, in his keynote “Asset Vision – Using AI Models and VR to get more out of Digital Twins.” Morace stressed that we have to talk about the journey because a number of the benefits that the average user wants from these emerging technologies still aren’t practical or possible.

“The interesting thing about our space is that we see this amazing future and all of these visionaries want to start at the end,” said Morace. “How do we take people along on this journey to get to where we all want to be while still making the most out of the technology that we have today?”

Morace specifically cited ads by Meta showing software that barely exists running on hardware that’s still a few years away (though other XR companies have been guilty of this as well). The good news is that extremely practical XR technologies do exist today, including for enterprise – we just need to accept that they’re on mobile devices and tablets right now.

Digital Twins and Virtual Humans

We might first think of digital twins of places or objects – and that’s how Morace was speaking of them. However, there are also digital twins of people. Claire Hedgespeth, Head of Production and Marketing at Avatar Dimension, addressed the opportunities and obstacles of these virtual humans in her talk, “Business of Virtual Humans.”

“The biggest obstacle for most people is the cost. … Right now, 2D videos are deemed sufficient for most outlets but I do feel that we’re missing an opportunity,” said Hedgespeth. “The potential for using virtual humans is only as limited as your imagination.”

The language of digital twins was also used on a global scale by AR Mavericks founder and CEO, William Wallace, in his talk “Augmented Reality and the Built World.” Wallace presented a combination of AR, advanced networks, and virtual positioning coming together to create an application layer he calls “The Tagisphere.”

“We can figure out where a person is so we can match them to the assets that are near them,” said Wallace. “It’s like a 3D model that you can access on your desktop, but we can bring it into the real world.”

It may sound a lot like the metaverse to some, but that word is out of fashion at the moment.

And the Destination Is … The Metaverse?

“We rarely use the M-word. We’re really not using it at all right now,” Qualcomm’s XR Senior Director, Martin Herdina, said in his talk “Spaces Enabling the Next Generation of Enterprise MR Experiences.”

Herdina put extra emphasis on computing advancements like cloud computing over the usual discussions of visual experience and form factor in his discussion of immersive technology. He also presented modern AR as a stepping stone to a largely MR future for enterprise.

“We see MR being a total game changer,” said Herdina. “Companies who have developed AR, who have tested those waters and built experience in that space, they will be first in line to succeed.”

VR/AR Association Co-Chair, Mark Gröb, expressed similar sentiments regarding “the M-word” in his VRARA Enterprise Committee Summary, which closed out the event.

“Enterprise VR had a reality check,” said Gröb. “The metaverse really was a false start. The hype redirected to AI-generated tools may or may not be a bad thing.”

Gröb further commented that people in the business of immersive technology specifically may be better able to get back to business with some of that outside attention drawn toward other things.

“Now we’re focusing on the more important thing, which was XR training,” said Gröb. “All of the business cases that we talked about today, it’s about consistent training.”

Business as Usual in the VR/AR Association

There has been a lot of discussion recently regarding “the death of the metaverse” – a concept that, arguably, hadn’t yet been born in the first place. Whether it was always just a gas, and the extent to which that gas has been entirely replaced by AI, remain to be seen.

While there were people talking about “the enterprise metaverse” – particularly referring to things like remote collaboration solutions – the metaverse is arguably more of a social technology anyway. While enterprise does enterprise, someone else will build the metaverse (or whatever we end up calling it) – and they’ll probably come from within the VR/AR Association as well.

Post originally appearing on Arpost.co by Jon Joehnig.